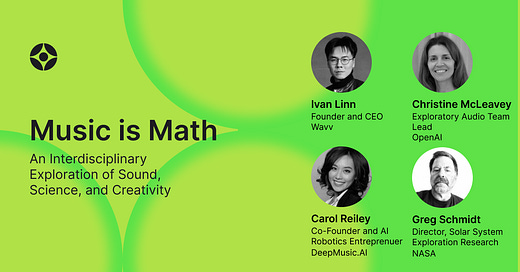

Between eclipses and brainwaves: field notes from OpenAI’s Music is Math event

Reflecting on AI-generated performances, philosophical debates, and the next frontier of creative collaboration

Experimenting with a new format: I was invited to an in-person event on music and mathematics at the OpenAI office, and it was very thought-provoking.

Here’s a quick field notes summary of the event.

Field Notes: OpenAI Music is Math Event

Date: Wednesday, February 26, 2025

Location: OpenAI’s San Francisco office

Purpose: Exploring AI’s role in music and creativity, featuring performances and discussions on AI-generated music and interdisciplinary approaches to sound.

Select people

Ivan Linn (Wavv) – Discussed AI’s role in music creation and evolution.

Christine McLeavey (OpenAI) – Shared insights on AI tools for music composition.

Carol Reiley (DeepMusic.AI) – Spoke on AI adoption in the music industry.

Greg Schmidt (NASA) – Presented on the AI-powered solar eclipse project.

Highlights

Performance 1: AI-generated solar eclipse music

This piece was composed using AI, translating data from the 2024 solar eclipse into sound. A pianist and violist performed the composition.

The music felt more like ambient background sound than actual music, lacking distinct motifs or traditional themes.

Notably, the violist’s playing technique was unconventional for classical viola, using a gliding bow movement that may be specific to generative AI-style music.

Performance 2: Heartbeat and EEG-driven composition

This piece was played by a pianist and a violinist and conducted by the composer’s heartbeat and a listener’s EEG signals. The EEG feed seemed to malfunction during the performance, and the heartbeat functioned more like a metronome.

While the concept was intriguing, the execution was not entirely successful, though it pointed to the potential for music conducted dynamically by neurological responses.

Panel discussion on AI’s role in music

The discussion explored deep philosophical questions but lacked concrete solutions for AI adoption in music.

A major theme was the challenge of encouraging musicians to embrace AI tools, given that many artists are hesitant to adopt new technologies.

Carol Reiley shared an example of partnering with Hilary Hahn, a renowned violinist, to make AI music tools more relatable to traditional musicians.

Ivan Linn highlighted the natural evolution of musical styles, comparing AI-driven changes to past shifts from Baroque to Classical to Romantic periods and beyond.

A fellow attendee suggested that music has often co-evolved with advances in instrument technology, e.g., the acoustic guitar becoming the electric guitar with transistors, leading to synthesizers and beyond.

Related thoughts

The debate over whether AI enhances creativity or merely increases originality was a recurring theme. Some academic attendees argued that AI-generated music lacks true creativity, while panelists like Christine McLeavey countered that AI tools enable greater creative freedom.

McLeavey, a Juilliard-trained pianist, shared that while she excelled at performance, AI tools have allowed her to compose in ways she previously couldn't. This led to the thought-provoking question: What does the AI-augmented ("centaur") musician of the future look like?

The event leaned more toward theoretical exploration than practical applications but opened up new avenues for discussion on AI’s role in artistic expression.